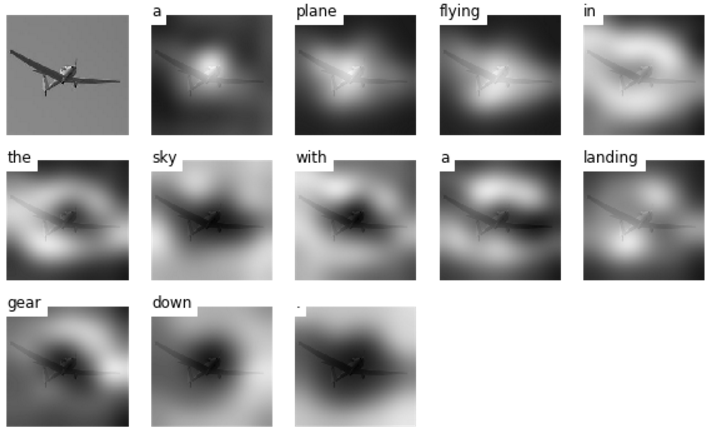

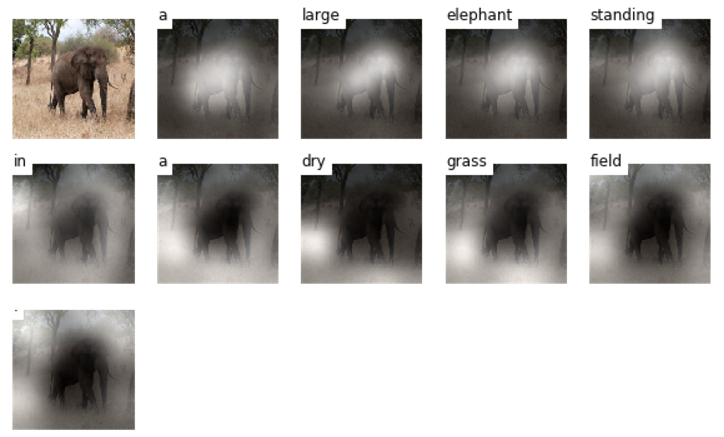

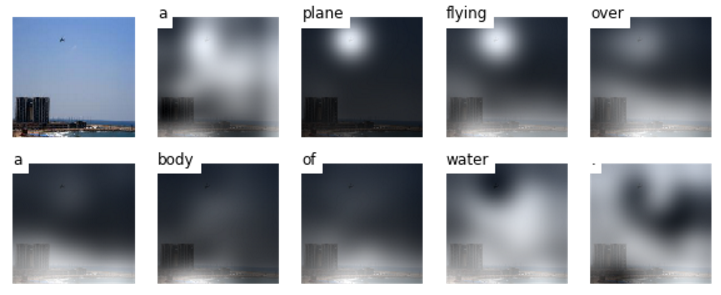

Update (December 2, 2016): TensorFlow implementation of Show, Attend and Tell: Neural Image Caption Generation with Visual Attention, which introduces an attention-based image caption generator. The model shifts its attention to the relevant part of the image as it generates each word.

This is based on Yunjey's show-attend-and-tell repository.
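To make the idea of attending to part of the image concrete, here is a minimal NumPy sketch of one soft-attention step. It is not taken from this repository's code: the function name `soft_attention`, the small scoring network, the random weights, and the shapes (196 locations, 512-dimensional features, 1024-dimensional decoder state) are all assumptions chosen for illustration.

```python
import numpy as np


def soft_attention(features, hidden, W_feat, W_hid, v):
    """One soft-attention step (illustrative sketch only).

    features: (L, D) array, one feature vector per image location
    hidden:   (H,) array, current decoder state
    W_feat:   (D, K), W_hid: (H, K), v: (K,) hypothetical scoring weights
    """
    # Score every location from its feature vector and the decoder state.
    scores = np.tanh(features.dot(W_feat) + hidden.dot(W_hid)).dot(v)  # (L,)
    # Softmax over locations, shifted by the max for numerical stability.
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    # Context vector: attention-weighted average of the location features.
    context = weights.dot(features)  # (D,)
    return context, weights


# Assumed sizes for illustration, e.g. a 14x14 grid of 512-d features.
rng = np.random.RandomState(0)
n_loc, feat_dim, hid_dim, att_dim = 196, 512, 1024, 512
features = rng.randn(n_loc, feat_dim)
hidden = rng.randn(hid_dim)
context, weights = soft_attention(
    features,
    hidden,
    rng.randn(feat_dim, att_dim) * 0.01,
    rng.randn(hid_dim, att_dim) * 0.01,
    rng.randn(att_dim) * 0.01,
)
```

The `weights` vector is what gets visualized as an attention map: one non-negative weight per image location, summing to 1, recomputed for each generated word.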

First, clone this repo and pycocoevalcap in the same directory.

$ git clone https://github.com/yunjey/show-attend-and-tell-tensorflow.git

$ git clone https://github.com/tylin/coco-caption.git

To change the dataset, replace captions_val2014.json in coco-caption/annotations/ and captions_val2014_fakecap_results.json in coco-caption/results/ as needed.

This code is written in Python 2.7 and requires TensorFlow 1.2. In addition, you need to install a few more packages to process the MSCOCO dataset. I have provided a script to download the MSCOCO image dataset and the VGGNet19 model.

Run the commands below to download the images into the image/ directory (ensure that train2014/ and val2014/ exist) and the VGGNet19 model (imagenet-vgg-verydeep-19.mat) into the data/ directory.

In addition, ensure that the caption files captions_train2014.json and captions_val2014.json are stored in the data/annotations/ folder.

$ cd show-attend-and-tell-tensorflow

$ pip install -r requirements.txt

$ chmod +x ./download.sh

$ ./download.sh

To feed the images to VGGNet, resize the MSCOCO images to a fixed size of 224x224. Run the command below; the resized images will be stored in the image/train2014_resized/ and image/val2014_resized/ directories.

$ python resize.py

Before training the model, you have to preprocess the MSCOCO caption dataset.
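As an aside on the resizing step above, the following is a rough sketch of what resizing a directory of images to 224x224 could look like with Pillow. It is not the contents of resize.py: the helper names `resize_image` and `resize_directory`, the choice of Lanczos resampling, and the RGB conversion are assumptions made for illustration.

```python
import os

from PIL import Image


def resize_image(image, size=(224, 224)):
    """Resize a PIL image to a fixed size (aspect ratio is not preserved)."""
    return image.resize(size, Image.LANCZOS)


def resize_directory(src_dir, dst_dir, size=(224, 224)):
    """Resize every image in src_dir and save it under the same name in dst_dir.

    Returns the number of images written. Hypothetical helper, not resize.py.
    """
    if not os.path.exists(dst_dir):
        os.makedirs(dst_dir)
    count = 0
    for name in sorted(os.listdir(src_dir)):
        # Skip anything that does not look like an image file.
        if not name.lower().endswith(('.jpg', '.jpeg', '.png')):
            continue
        with Image.open(os.path.join(src_dir, name)) as image:
            # Convert to RGB so grayscale images also end up with 3 channels.
            resized = resize_image(image.convert('RGB'), size)
            resized.save(os.path.join(dst_dir, name))
        count += 1
    return count


# Example call, assuming the directory layout described in this README:
# resize_directory('image/train2014', 'image/train2014_resized')
```

Resizing straight to a square distorts non-square images; that is usually acceptable for a fixed-input network, but cropping first is a possible alternative.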

To generate the caption dataset and image feature vectors, run the command below.

$ python prepro.py

Adjust max_length to limit caption length in words and word_count_threshold to filter out infrequent words.
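To illustrate how these two settings might interact, here is a small standalone sketch of caption preprocessing. It is not the logic of prepro.py: the helper names, the special tokens, the simple tokenizer, and the choice to truncate long captions and keep words with a count at or above the threshold are all assumptions.

```python
from collections import Counter

# Hypothetical special tokens; the real vocabulary layout may differ.
SPECIAL_TOKENS = ['<NULL>', '<START>', '<END>', '<UNK>']


def tokenize(caption, max_length):
    """Lowercase, strip basic punctuation, and keep at most max_length words."""
    words = caption.lower().replace('.', ' ').replace(',', ' ').split()
    return words[:max_length]


def build_vocab(captions, max_length=15, word_count_threshold=1):
    """Map each sufficiently frequent word to an integer index."""
    counter = Counter()
    for caption in captions:
        counter.update(tokenize(caption, max_length))
    # Keep only words seen at least word_count_threshold times.
    kept = sorted(w for w, c in counter.items() if c >= word_count_threshold)
    word_to_idx = {tok: i for i, tok in enumerate(SPECIAL_TOKENS)}
    for word in kept:
        word_to_idx[word] = len(word_to_idx)
    return word_to_idx


def encode(caption, word_to_idx, max_length=15):
    """Turn a caption into a fixed-length list of max_length + 2 indices."""
    unk = word_to_idx['<UNK>']
    ids = [word_to_idx['<START>']]
    ids += [word_to_idx.get(w, unk) for w in tokenize(caption, max_length)]
    ids.append(word_to_idx['<END>'])
    # Pad with <NULL> so every caption has the same length.
    ids += [word_to_idx['<NULL>']] * (max_length + 2 - len(ids))
    return ids


captions = [
    'A dog runs on the grass.',
    'A dog sleeps.',
    'A cat sits on a mat, quietly.',
]
vocab = build_vocab(captions, max_length=5, word_count_threshold=2)
encoded = encode(captions[0], vocab, max_length=5)
```

Raising word_count_threshold shrinks the vocabulary and sends more words to `<UNK>`; raising max_length keeps more of each caption at the cost of longer padded sequences.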

To train the image captioning model, run the command below.

$ python train.py

Adjust n_time_step to fit different sentence lengths.

To generate captions, visualize attention weights and evaluate the model, please see evaluate_model.ipynb.