This repository contains research code for the paper *Towards Privacy-Aware Sign Language Translation at Scale*.

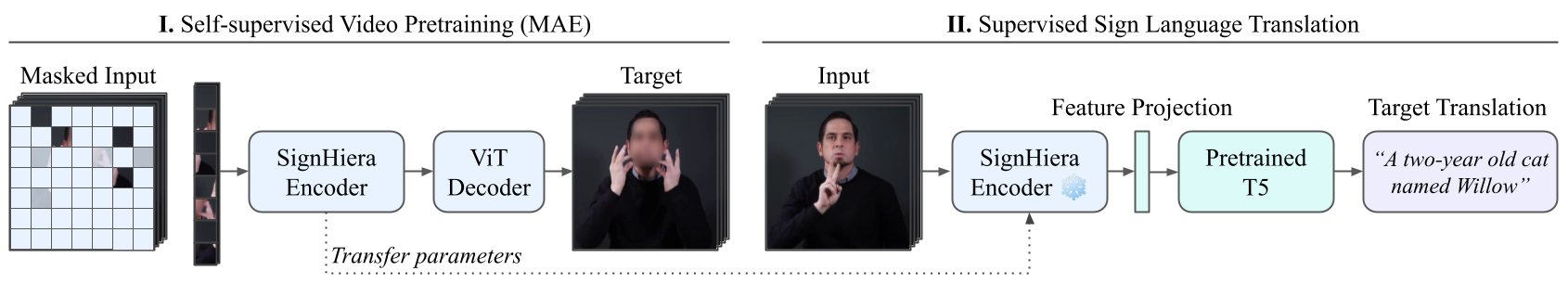

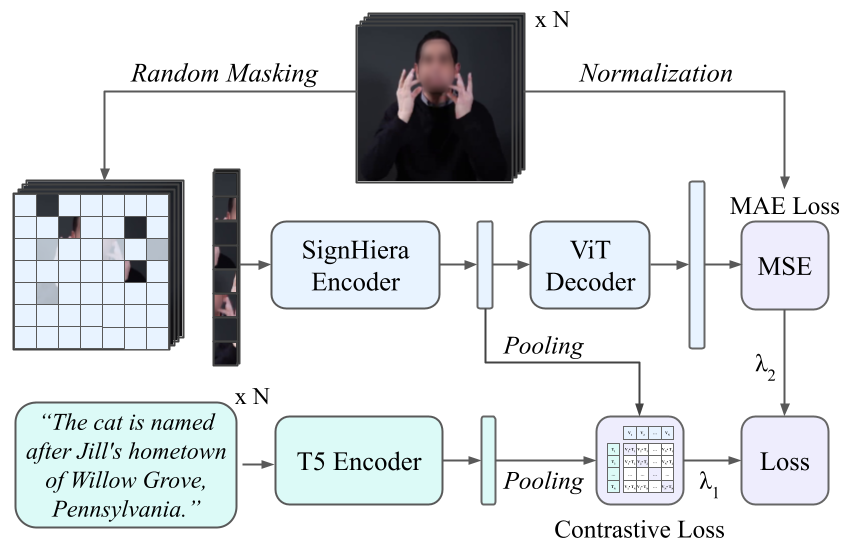

SSVP-SLT relies on masked autoencoding (MAE) on anonymized videos as a form of self-supervised pretraining to learn continuous sign language representations at scale. The learned representations are transferred to the supervised gloss-free sign language translation task. SSVP-SLT outperforms prior SOTA methods on the ASL-to-English How2Sign benchmark in the finetuned and zero-shot settings by over 3 BLEU points.
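The random-masking step at the core of MAE pretraining can be illustrated with a minimal, stdlib-only sketch. It assumes the video has already been cut into spatiotemporal patch tokens. The helper names `num_patches` and `random_masking`, the 16-frame 224x224 input, the 16x16 patches, the 2-frame tubelets, and the 0.9 mask ratio are hypothetical illustration values, not settings taken from this repository's configs.

```python
import random
from typing import List, Optional, Tuple


def num_patches(frames: int, height: int, width: int,
                patch_size: int = 16, tubelet: int = 2) -> int:
    """Number of spatiotemporal patch tokens for a clip (hypothetical sizes)."""
    return (frames // tubelet) * (height // patch_size) * (width // patch_size)


def random_masking(num_tokens: int, mask_ratio: float,
                   seed: Optional[int] = None) -> Tuple[List[int], List[int]]:
    """Split token indices into visible and masked sets, MAE-style."""
    if not 0.0 <= mask_ratio < 1.0:
        raise ValueError("mask_ratio must be in [0, 1)")
    rng = random.Random(seed)
    order = list(range(num_tokens))
    rng.shuffle(order)  # uniform random permutation of token indices
    num_keep = int(num_tokens * (1.0 - mask_ratio))  # tokens the encoder sees
    visible = sorted(order[:num_keep])
    masked = sorted(order[num_keep:])  # targets the decoder must reconstruct
    return visible, masked


if __name__ == "__main__":
    n = num_patches(16, 224, 224)  # 8 * 14 * 14 = 1568 tokens
    visible, masked = random_masking(n, mask_ratio=0.9, seed=0)
    print(n, len(visible), len(masked))  # prints 1568 156 1412
```

In this sketch only the visible tokens would be passed to the encoder, and the reconstruction loss would be computed on the masked ones; a high mask ratio keeps pretraining cheap because the encoder processes only a small fraction of each clip. The actual pretraining setup is documented in pretraining/README.md.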

We provide installation instructions in INSTALL.md.

We describe how to prepare the datasets in DATASETS.md.

- MAE pretraining instructions are in pretraining/README.md.

- Joint MAE & CLIP/FLIP pretraining instructions are in pretraining_clip/README.md.

Instructions for feature extraction and SLT training and evaluation are in translation/README.md.

We release the DailyMoth-70h (DM-70) dataset as part of this project. DailyMoth-70h is released under a CC-BY-NC 4.0 license.

An overview of the data, along with download and data-preparation instructions, is in DATASETS.md.

Alternatively, download the files manually via these links:

| Subset | Link | MD5 checksum |
|---|---|---|
| Raw videos | download | 875ffe4eeac3a37e50b4202c2b4996d2 |
| Blurred clips | download | a2819c7b06a8b38eb7686e4dc90a7433 |
| Unblurred clips | download | 3e69046f6cf415cec89c3544d0523325 |
| Manifest files | download | 69e500cc5cfad3133c4b589428865472 |
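To check a manual download against the MD5 values listed for each subset, one option is a small stdlib Python helper like the sketch below. It streams the file in chunks so large archives, such as the raw videos, need not fit in memory. The function names `md5sum` and `verify` and the example filename are hypothetical; only the checksums come from this README.

```python
import hashlib
from pathlib import Path

# MD5 checksums listed for each DailyMoth-70h subset; keys are the subset names.
EXPECTED_MD5 = {
    "Raw videos": "875ffe4eeac3a37e50b4202c2b4996d2",
    "Blurred clips": "a2819c7b06a8b38eb7686e4dc90a7433",
    "Unblurred clips": "3e69046f6cf415cec89c3544d0523325",
    "Manifest files": "69e500cc5cfad3133c4b589428865472",
}


def md5sum(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex MD5 digest of a file, reading it chunk by chunk."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        # iter() with a sentinel keeps reading until read() returns b"" (EOF).
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify(path: Path, subset: str) -> bool:
    """Compare a downloaded file's MD5 against the expected value for its subset."""
    return md5sum(path) == EXPECTED_MD5[subset]
```

For example, `verify(Path("dm70_manifests.tar.gz"), "Manifest files")` should return `True` for an intact download; the filename here is only a placeholder for whatever the downloaded file is called.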

> [!NOTE]
> Check out our paper for detailed information on the DailyMoth-70h dataset.

If you find our work useful in your research, please consider citing:

    @inproceedings{rust-etal-2024-towards,
        title = "Towards Privacy-Aware Sign Language Translation at Scale",
        author = "Rust, Phillip and Shi, Bowen and Wang, Skyler and Camgoz, Necati Cihan and Maillard, Jean",
        booktitle = "Proceedings of the 62nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",
        year = "2024",
        address = "Bangkok, Thailand",
        publisher = "Association for Computational Linguistics",
        url = "https://aclanthology.org/2024.acl-long.467",
        pages = "8624--8641",
    }

This codebase is heavily influenced by the mae and mae_st repositories. Our models are based on code from Hiera, HF Transformers, OpenCLIP, and Fairseq.

This project is primarily under the CC-BY-NC 4.0 license; see LICENSE for details. Portions of the project are available under separate license terms: Transformers is licensed under the Apache-2.0 license and OpenCLIP is licensed under the OpenCLIP license.