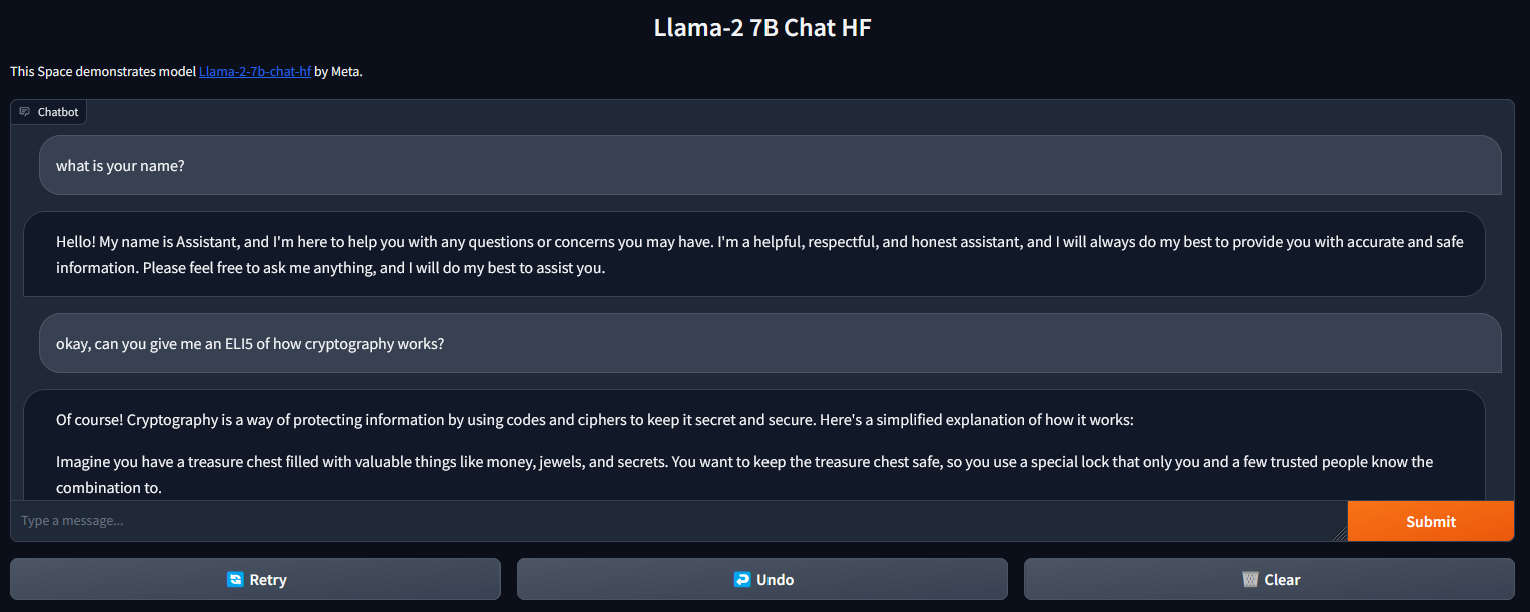

This app lets you run LLaMA v2 locally via Gradio and Huggingface Transformers. Other demos require the Huggingface inference server or require replicate, which are hosted solutions accessible through a web API. This demo instead runs the models directly on your device (assuming you meet the requirements).

| Kaggle Demo |

|---|

- You need a GPU with at least 10GB of VRAM (more is better and ensures the model will run correctly).

- You need to have access to the Huggingface

meta-llamarepositories, which you can obtain by filling out the form. - You need to create a Huggingface access token and add it as an environment variable called

HUGGINGFACE_TOKEN, e.g. to your.bashrc.

Clone this repository:

git clone https://github.com/xhluca/llama-2-local-ui

Create a virtual environment:

Install with:

pip install -r requirements.txt

Run the app:

python app.py

You can modify the content of app.py for more control. By default, it uses 4-bit inference (see blog post. It is a very simple app (~100 lines), so it should be straight forward to understand. The streaming part relies on threading and queue, but you probably won't need to worry about that unless you need to change the streaming behavior.

The app extends @yvrjsharma's original app with the addition of transformers, thread and queue.