This package contains a benchmark tool for testing the perception stack of Autoware.Universe with the Waymo dataset.

To test 3D object tracking with the Waymo dataset, follow the steps below.

1- Download the Waymo dataset validation segment (.tfrecord) files from the link below and unpack them into the desired directory.

https://waymo.com/open/download/

cd ~/Downloads/

tar -xvf validation_validation_0000.tar

Change the dataset folder in "benchmark_runner.launch.py" to match the directory where you unpacked the files.
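Before pointing the launch file at the dataset folder, it can help to confirm that the archive actually unpacked into segment files. The following is a minimal sketch, assuming the segment files end in `.tfrecord` as described above; `count_segments` is a hypothetical helper and not part of the benchmark tool.

```shell
# Hypothetical helper (not part of the benchmark tool):
# print the number of .tfrecord segment files found under a directory.
count_segments() {
  local dir="$1"
  # tr strips the padding some wc implementations add around the count
  find "$dir" -type f -name '*.tfrecord' | wc -l | tr -d ' '
}
```

For example, `count_segments ~/Downloads` prints how many segment files were found under the download directory used above.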

2- Install the Waymo Open Dataset Toolkit.

mkdir -p ~/perception_benchmark_ws/src

cd ~/perception_benchmark_ws/src

git clone https://github.com/autowarefoundation/perception_benchmark_tool.git

pip3 install protobuf==3.9.2 numpy==1.19.2

pip3 install waymo-open-dataset-tf-2-6-0

3- Run Autoware.Universe with the Waymo evaluation node.

Go to the Autoware folder and source it:

source install/setup.bash

Build the perception benchmark tool:

cd ~/perception_benchmark_ws/

rosdep install -y --from-paths src --ignore-src --rosdistro $ROS_DISTRO

colcon build

source install/setup.bash

Run the perception benchmark tool:

ros2 launch perception_benchmark_tool benchmark_runner.launch.py

This command runs the perception stack on the Waymo dataset. The ground truth and prediction files are written to the file paths passed as arguments to the launch file.

Lidar point clouds and camera images are encoded in the .tfrecord file. Decoding them takes roughly 60-90 seconds per segment file.
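Because the decoding cost is paid per segment file, the total wait grows with the number of segments. As a rough sketch, the bounds can be estimated from the 60-90 second figure quoted above; `estimate_decode_seconds` is a hypothetical helper, and the real time will depend on the machine.

```shell
# Hypothetical helper: print the lower and upper bound, in seconds,
# of the total decode time for N segment files (60-90 s per file).
estimate_decode_seconds() {
  local n="$1"
  echo "$((n * 60)) $((n * 90))"
}
```

For instance, `estimate_decode_seconds 10` prints `600 900`, that is, roughly 10 to 15 minutes for ten segments.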

4- Install Waymo Open Dataset toolkit for metric computation:

Run the commands below, or follow the instructions provided by Waymo: https://github.com/waymo-research/waymo-open-dataset/blob/master/docs/quick_start.md

git clone https://github.com/waymo-research/waymo-open-dataset.git waymo-od

cd waymo-od

git checkout remotes/origin/master

sudo apt-get install --assume-yes pkg-config zip g++ zlib1g-dev unzip python3 python3-pip

BAZEL_VERSION=3.1.0

wget https://github.com/bazelbuild/bazel/releases/download/${BAZEL_VERSION}/bazel-${BAZEL_VERSION}-installer-linux-x86_64.sh

sudo bash bazel-${BAZEL_VERSION}-installer-linux-x86_64.sh

sudo apt install build-essential

./configure.sh

bazel clean

bazel build waymo_open_dataset/metrics/tools/compute_tracking_metrics_main

5- Evaluate the tracking result

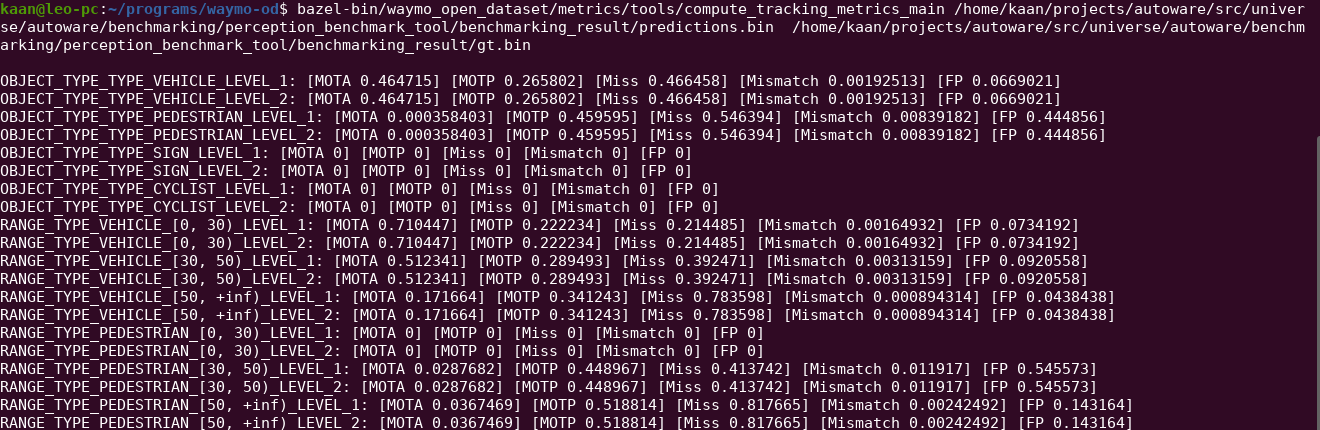

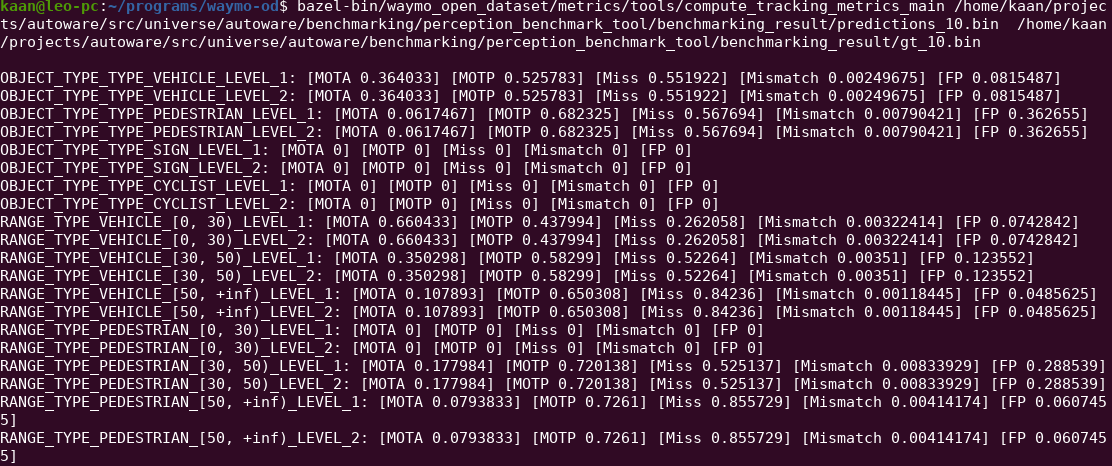
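Before running the metrics tool, it may be worth checking that both result files exist and are non-empty, since a failed benchmark run can leave them missing. The following is a minimal sketch; `check_result_files` is a hypothetical helper, and the file paths passed to it are whatever was given to the launch file.

```shell
# Hypothetical helper: succeed only if every given file exists and is non-empty.
check_result_files() {
  local f
  for f in "$@"; do
    if [ ! -s "$f" ]; then
      echo "missing or empty: $f" >&2
      return 1
    fi
  done
}
```

It could be used as `check_result_files ~/benchmark_result/predictions.bin ~/benchmark_result/gt.bin && echo "ready to evaluate"`.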

bazel-bin/waymo_open_dataset/metrics/tools/compute_tracking_metrics_main \
  ~/benchmark_result/predictions.bin ~/benchmark_result/gt.bin

The evaluation result of the perception pipeline on the Waymo dataset is presented below.